
Apache Airflow Tutorial – Part 1: Introduction (Mar 08, 2019; tagged: Apache Airflow, distributed computing, Docker, Python). What is Apache Airflow? Briefly, Apache Airflow is a workflow management system (WMS). It groups tasks into analyses and defines a logical template for when these analyses should be run. Then it gives you all kinds of amazing…

Basic Airflow concepts

- Task: a defined unit of work (in Airflow, tasks are created by instantiating operators)

- Task instance: an individual run of a single task. Each task instance also has a state indicating its progress, such as 'running', 'success', 'failed', 'skipped' or 'up for retry'.

- DAG: Directed Acyclic Graph, a set of tasks with an explicit execution order, a beginning, and an end

- DAG run: an individual execution (run) of a DAG
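To make the relationship between these four concepts concrete, here is a minimal plain-Python model. It is an illustration only: `State`, `Task`, `TaskInstance`, `DAG`, `DagRun` and `create_dag_run` are hypothetical, simplified stand-ins (Airflow has its own classes with some of these names, and a richer set of states). What it shows is that every DAG run gets one task instance per task, and each task instance carries its own state.

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum
from typing import List


class State(str, Enum):
    """Simplified subset of task instance states (hypothetical, not Airflow's enum)."""
    NO_STATUS = "no_status"  # created but not yet scheduled
    RUNNING = "running"
    SUCCESS = "success"
    FAILED = "failed"
    SKIPPED = "skipped"
    UP_FOR_RETRY = "up_for_retry"


@dataclass(frozen=True)
class Task:
    task_id: str  # a defined unit of work


@dataclass
class DAG:
    dag_id: str
    tasks: List[Task]


@dataclass
class TaskInstance:
    task: Task
    execution_date: datetime
    state: State = State.NO_STATUS  # each run of a task tracks its own state


@dataclass
class DagRun:
    dag: DAG
    execution_date: datetime
    task_instances: List[TaskInstance] = field(default_factory=list)


def create_dag_run(dag: DAG, execution_date: datetime) -> DagRun:
    """Create a DAG run holding one fresh task instance per task in the DAG."""
    run = DagRun(dag, execution_date)
    run.task_instances = [TaskInstance(task, execution_date) for task in dag.tasks]
    return run


dag = DAG("example_pipeline", [Task("pull"), Task("anonymize"), Task("dedupe")])
run = create_dag_run(dag, datetime(2019, 3, 8))
run.task_instances[0].state = State.RUNNING  # only this task instance changes
print([(ti.task.task_id, ti.state.value) for ti in run.task_instances])
```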

This tutorial walks you through some of the fundamental Airflow concepts, objects, and their usage while writing your first pipeline.

Debunking the DAG

In a DAG, the vertices (nodes) are linked by edges (the arrows connecting the nodes), and each edge has a direction, which gives the nodes an order.

Each node in a DAG corresponds to a task, which in turn represents some sort of data processing. For example:

Node A could be the code for pulling data from an API, node B the code for anonymizing the data, node C the code for checking that there are no duplicate records, and so on.

These 'pipelines' are acyclic because they need a point of completion.
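The acyclicity requirement can be checked mechanically. The sketch below uses Python's standard-library `graphlib` (Python 3.9+), not Airflow, with hypothetical task names taken from the example above: a valid DAG yields a run order with a definite last task, while making the first task depend on the last one creates a cycle that `graphlib` rejects.

```python
from graphlib import CycleError, TopologicalSorter  # Python 3.9+


def execution_order(dependencies):
    """Return one valid run order, or raise CycleError if the graph contains a cycle.

    `dependencies` maps each task to the set of tasks it depends on (its upstream tasks).
    """
    return list(TopologicalSorter(dependencies).static_order())


# Acyclic pipeline: pull -> anonymize -> dedupe
pipeline = {
    "anonymize": {"pull"},
    "dedupe": {"anonymize"},
}
print(execution_order(pipeline))

# Making "pull" depend on "dedupe" closes a loop: no task can ever be the last one.
looping = {**pipeline, "pull": {"dedupe"}}
try:
    execution_order(looping)
except CycleError as err:
    print("cycle detected:", err.args[1])
```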

Dependencies

Each edge has a particular direction that shows the relationship between two nodes. For example, we can only anonymize the data once it has been pulled from the API.
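In an Airflow DAG file, such dependencies are usually declared with the bitshift operator, as in `task_a >> task_b`. The toy class below only mimics that syntax in plain Python so the idea can run without Airflow installed; `ToyTask` and `can_run` are hypothetical. The readiness check (a task may start only once every upstream task has succeeded) roughly corresponds to Airflow's default `all_success` trigger rule.

```python
class ToyTask:
    """Hypothetical stand-in for an Airflow task, used only to illustrate dependencies."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = set()

    def __rshift__(self, other):
        # Mimics Airflow's `a >> b` syntax: `other` runs only after `self`.
        other.upstream.add(self)
        return other  # returning `other` allows chains like a >> b >> c


def can_run(task, states):
    """A task is ready only when every upstream task has succeeded."""
    return all(states.get(up.task_id) == "success" for up in task.upstream)


pull = ToyTask("pull_from_api")
anonymize = ToyTask("anonymize")
dedupe = ToyTask("check_duplicates")
pull >> anonymize >> dedupe

print(can_run(anonymize, {"pull_from_api": "running"}))
print(can_run(anonymize, {"pull_from_api": "success"}))
```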


Update your local configuration

Open your Airflow configuration file, ~/airflow/airflow.cfg, and update the executor and database settings.

Here we replace the default executor (SequentialExecutor) with the CeleryExecutor so that we can run multiple DAGs in parallel. We also replace the default SQLite database with our newly created airflow database, since SQLite can only be used with the SequentialExecutor.
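A minimal sketch of these two edits using Python's standard `configparser`, assuming an Airflow 1.10-style file where both `executor` and `sql_alchemy_conn` live in the `[core]` section (later releases moved the database setting to a `[database]` section). The helper name, sample file, and MySQL credentials are hypothetical, and a working CeleryExecutor setup also needs a message broker configured in the `[celery]` section, which this sketch leaves alone.

```python
import configparser
import io


def update_airflow_cfg(cfg_text, executor, sql_alchemy_conn):
    """Return airflow.cfg text with a new executor and metadata database connection.

    Caveat: configparser drops comments when it writes, so keep a backup of the original file.
    """
    # Mirror the rule described above: only the SequentialExecutor works with SQLite.
    if executor != "SequentialExecutor" and sql_alchemy_conn.startswith("sqlite"):
        raise ValueError(f"{executor} cannot be used with a SQLite database")

    parser = configparser.ConfigParser(interpolation=None)  # leave '%' sequences untouched
    parser.read_string(cfg_text)
    parser["core"]["executor"] = executor
    parser["core"]["sql_alchemy_conn"] = sql_alchemy_conn

    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


sample_cfg = """
[core]
executor = SequentialExecutor
sql_alchemy_conn = sqlite:////home/user/airflow/airflow.db
"""

updated = update_airflow_cfg(
    sample_cfg,
    executor="CeleryExecutor",
    # Hypothetical MySQL credentials; use the database you created yourself.
    sql_alchemy_conn="mysql://airflow_user:airflow_pass@localhost:3306/airflow",
)
print(updated)
```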

Now we can initialize the database:

Let's now start the web server locally:

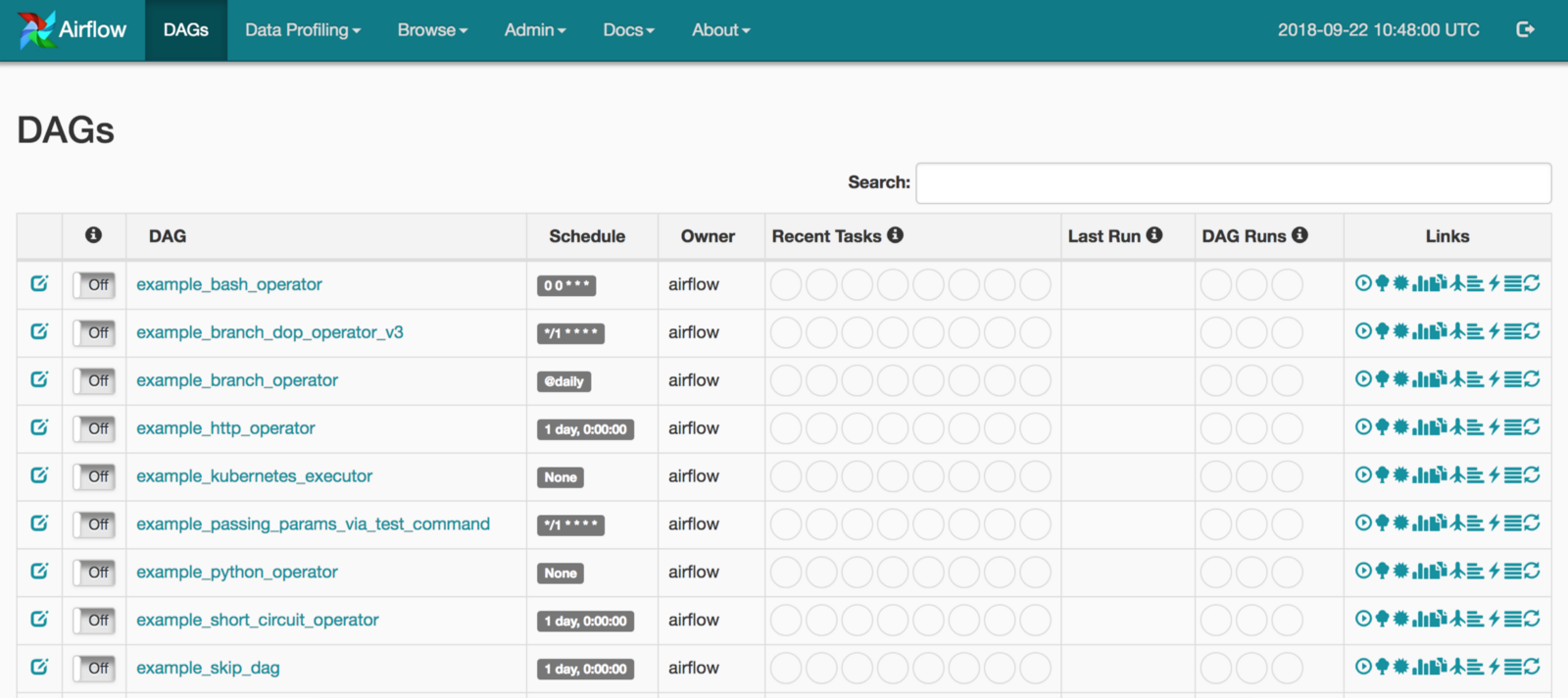

We can now head over to http://localhost:8080, where you will see that a number of example DAGs are already there.
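Before opening the browser, you may want to confirm that the webserver is actually accepting requests. Below is a small standard-library sketch, assuming the default address http://localhost:8080 used above; `webserver_is_up` is a hypothetical helper, not part of Airflow.

```python
import urllib.error
import urllib.request


def webserver_is_up(url="http://localhost:8080", timeout=5.0):
    """Return True if an HTTP server answers at `url`, False if nothing is listening."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied with an error status (e.g. 401 or 500), so it is running.
        return True
    except OSError:
        # URLError (connection refused, DNS failure) and timeouts are both OSErrors.
        return False


if __name__ == "__main__":
    print("Airflow webserver reachable:", webserver_is_up())
```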

🚦 Take some time to familiarise yourself with the UI and get your local instance set up.

Now let's have a look at the connections: go to Admin > Connections (http://localhost:8080/admin/connection/). You should see a number of connections already available. For this tutorial, we will use some of these connections, including mysql.
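Each entry on the Connections page is a set of fields (connection type, host, login, password, port, schema). Airflow can also read a connection from an environment variable named `AIRFLOW_CONN_<CONN_ID>` (conn id in upper case) whose value is a URI of the form `type://login:password@host:port/schema`. The sketch below only illustrates how those fields map onto such a URI; the helper functions and the credentials are hypothetical.

```python
from urllib.parse import quote, unquote, urlsplit


def connection_uri(conn_type, host, login, password, port, schema):
    """Build a `type://login:password@host:port/schema` connection URI."""
    # Percent-encode credentials so characters like '@' or ':' don't break the URI.
    return f"{conn_type}://{quote(login, safe='')}:{quote(password, safe='')}@{host}:{port}/{schema}"


def parse_connection_uri(uri):
    """Split a connection URI back into the fields shown on the Connections page."""
    parts = urlsplit(uri)
    return {
        "conn_type": parts.scheme,
        "host": parts.hostname,
        "login": unquote(parts.username or ""),
        "password": unquote(parts.password or ""),
        "port": parts.port,
        "schema": parts.path.lstrip("/"),
    }


def env_var_name(conn_id):
    """Name of the environment variable Airflow checks for connection `conn_id`."""
    return f"AIRFLOW_CONN_{conn_id.upper()}"


uri = connection_uri("mysql", "localhost", "airflow_user", "p@ss:word", 3306, "airflow")
print(env_var_name("mysql_default"), "=", uri)
print(parse_connection_uri(uri))
```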

Commands

Let us go over some of the commands. Back on your command line:

We can list the tasks of a DAG in a tree view.
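The tree view prints each task with its downstream tasks indented beneath it. The sketch below reproduces that idea in plain Python for a dependency mapping shaped like Airflow's bundled `tutorial` example (print_date followed by sleep and templated); it is only an illustration, the helpers `roots` and `tree_lines` are hypothetical, and Airflow's own output format may differ.

```python
def roots(downstream):
    """Tasks that no other task points to, i.e. tasks with no upstream dependency."""
    children = {child for kids in downstream.values() for child in kids}
    return [task for task in downstream if task not in children]


def tree_lines(downstream, task, depth=0):
    """Render `task` and everything downstream of it as indented lines."""
    lines = ["    " * depth + task]
    for child in downstream.get(task, []):
        lines.extend(tree_lines(downstream, child, depth + 1))
    return lines


# Shaped like Airflow's bundled "tutorial" example: print_date runs before sleep and templated.
downstream = {"print_date": ["sleep", "templated"], "sleep": [], "templated": []}
for root in roots(downstream):
    print("\n".join(tree_lines(downstream, root)))
```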

We can also test the tasks in a DAG, but we need to pass a date parameter for the test to execute:

(Note that you cannot use a future date, or you will get an error.)

Task runs made with the test command are not saved in the database.
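A minimal sketch of the test step, assuming the Airflow 1.10 CLI form `airflow test <dag_id> <task_id> <execution_date>` (Airflow 2 renamed it to `airflow tasks test`). The helper `airflow_test_command` is hypothetical: it only builds the argument list and enforces the "no future dates" rule noted above before anything is executed.

```python
import shlex
from datetime import date


def airflow_test_command(dag_id, task_id, execution_date, today=None):
    """Build an Airflow 1.10-style `airflow test` command, refusing future dates."""
    today = today or date.today()
    if execution_date > today:
        raise ValueError(f"execution date {execution_date} is in the future")
    return ["airflow", "test", dag_id, task_id, execution_date.isoformat()]


cmd = airflow_test_command("tutorial", "print_date", date(2019, 3, 1))
print(shlex.join(cmd))
```

On a machine with Airflow 1.10 installed, the list could be passed to `subprocess.run(cmd, check=True)`; building it separately keeps the date check testable without Airflow.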

Now let's start the scheduler:


Behind the scenes, the scheduler monitors the DAG folder and stays in sync with all the DAG objects it contains. The Airflow scheduler is designed to run as a persistent service in an Airflow production environment.
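To picture what staying in sync with a folder means, here is a deliberately simplified polling sketch. It is not how the Airflow scheduler is implemented (the scheduler also parses each file and loads its DAG objects); it only detects added, removed and modified DAG files between two scans. The function names are hypothetical, and the path assumes Airflow's default `dags_folder` of `~/airflow/dags`.

```python
import os


def snapshot(dag_folder):
    """Map every .py file under `dag_folder` (recursively) to its last-modified time."""
    files = {}
    for dirpath, _dirnames, filenames in os.walk(dag_folder):
        for name in filenames:
            if name.endswith(".py"):
                path = os.path.join(dirpath, name)
                files[path] = os.path.getmtime(path)
    return files


def diff(old, new):
    """Return (added, removed, modified) file lists between two snapshots."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    modified = sorted(p for p in new.keys() & old.keys() if new[p] != old[p])
    return added, removed, modified


if __name__ == "__main__":
    # A long-running service would repeat this scan periodically and act on the diff.
    print(diff({}, snapshot(os.path.expanduser("~/airflow/dags"))))
```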

Now, with the scheduler up and running, we can trigger a DAG run:

This run will be stored in the database, and you can see its status change straight away.
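The status shown for a triggered run depends on the states of its task instances. The function below is a simplified, hypothetical rule for that relationship (Airflow's actual logic, for example around leaf tasks and deadlocks, is more involved): any failure fails the run, all tasks succeeded or skipped means success, and anything else means the run is still in progress.

```python
def dag_run_state(task_states):
    """Simplified, hypothetical rule for a DAG run's status from its task instance states."""
    states = list(task_states)
    if any(s in ("failed", "upstream_failed") for s in states):
        return "failed"
    if all(s in ("success", "skipped") for s in states):
        return "success"
    return "running"  # some task has not finished yet (queued, running, up for retry, ...)


# Re-evaluating the rule as tasks progress gives a rough model of the status change in the UI.
print(dag_run_state(["success", "running", "no_status"]))
print(dag_run_state(["success", "success", "skipped"]))
print(dag_run_state(["success", "failed", "upstream_failed"]))
```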

What would happen, for example, if we wanted to run or trigger the tutorial task? 🤔

Let's try from the CLI and see what happens.